Fully automated, almost free backups

One of our clients was recently burned by their hosting company not providing the backups they expected. As we helped transition them to a new system, I focused on setting up solid backups and am very pleased with the results: Fully automated, offsite backups for under $2/month.

Free Backups on Heroku

If you’re on Heroku you already get backups for free, but you have to remember to install the free PgBackups addon. Seriously, there’s no reason not to do it. You get: “Daily automatic backups. Retains 7 daily backups, 5 weekly backups, and 10 manual backups” all for free!

Move them Offsite.

Next, I wanted another copy somewhere else. So I used another free Heroku addon, Scheduler, to set up a task that does this once per day (use cron for your non-Heroku apps). For the task, I used the handy pgbackups-archive gem and ended up with a command that looks like:

rake pgbackups:archive && curl https://nosnch.in/c2354d53d2

What’s with the curl at the end? I’m using the excellent Dead Man’s Snitch to notify me if the backup doesn’t run! Brilliant.
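The `&&` is what makes this work: the snitch only hears from the task when the rake command exits successfully, so a failed or skipped backup shows up as a missed check-in. For a non-Heroku app run from cron, the same idea could be sketched in Python roughly as below. The function names `run_then_ping`, `ping_snitch`, and `main` are made up for this illustration and are not part of any gem or service.

```python
import subprocess
import sys
import urllib.request


def run_then_ping(command, ping):
    """Run `command`; call `ping()` only if it exits with status 0.

    Mirrors `command && curl ...`: a failing backup never checks in.
    """
    result = subprocess.run(command)
    if result.returncode == 0:
        ping()
        return True
    return False


def ping_snitch(url, timeout=10):
    """Check in with the snitch by making a plain GET request."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.status


def main():
    # Snitch URL copied from the task above; substitute your own.
    snitch_url = "https://nosnch.in/c2354d53d2"
    ok = run_then_ping(["rake", "pgbackups:archive"],
                       lambda: ping_snitch(snitch_url))
    sys.exit(0 if ok else 1)
```

Calling `main()` from a daily cron job would then behave like the one-liner above. Passing the ping in as a callable keeps the success check easy to test without touching the network.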

The task moves them to an Amazon S3 bucket[1], which I created in a west coast region, since all of Heroku runs on the east coast.

Archive in Glacier

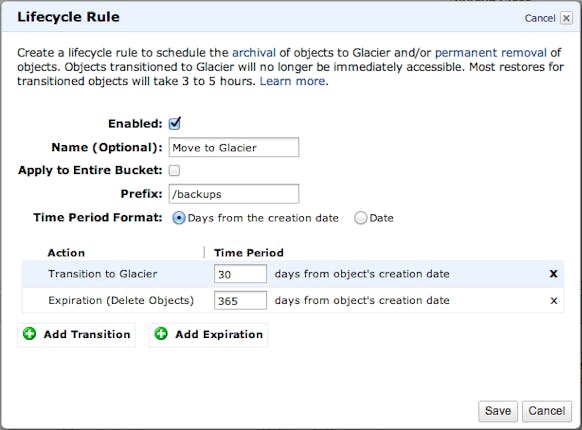

Next, I’m using a new feature of S3 to automatically move backups to Amazon Glacier. Glacier is a fraction of the cost of S3, and while retrieving data from it is slower, that’s a tradeoff we’re willing to make. Here’s my setup: I move each file to Glacier after 30 days, and then delete it after 365 (we don’t need to keep backups longer than that).
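I set this rule up in the S3 console, but the same 30-day transition and 365-day expiration can also be written down as a lifecycle configuration. The sketch below builds one in Python and, optionally, applies it with boto3. The helper names, the rule ID, and the bucket name in the usage note are placeholders I picked for the example, and boto3 is a third-party library that was not part of my original setup.

```python
def glacier_lifecycle(transition_days=30, expire_days=365, prefix=""):
    """Build an S3 lifecycle configuration: Glacier first, then delete.

    An empty prefix applies the rule to every object in the bucket.
    """
    if expire_days <= transition_days:
        raise ValueError("expire_days must be greater than transition_days")
    return {
        "Rules": [
            {
                "ID": "archive-then-expire",  # placeholder rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": transition_days, "StorageClass": "GLACIER"}
                ],
                "Expiration": {"Days": expire_days},
            }
        ]
    }


def apply_lifecycle(bucket, config):
    """Send the configuration to S3 (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above has no dependencies

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )
```

With credentials in place, something like `apply_lifecycle("my-backup-bucket", glacier_lifecycle())` should reproduce the rule described above. Keeping the rule in code also makes it easy to review when the retention policy changes.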

What does it cost?

As if automating this weren’t enough, doing the math made me smile. A full database backup is around 180 MB[2], so storing 30 days on S3 will cost ~82¢/month. Storing a year’s worth of backups on Glacier is ~62¢/month. Added together, that gives us a whopping $1.44/month, and it would be cheaper still if we moved backups to Glacier sooner!
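The arithmetic behind those figures is simple enough to sketch. Once the pipeline has been running for a full year, S3 holds the most recent 30 daily backups and Glacier holds the rest, and each pile is billed per GB per month. The function below is only a rough model: the prices are parameters you have to supply from the current price list for your region, and request, transfer, and retrieval charges are ignored, so it will not necessarily land on my exact numbers.

```python
def steady_state_cost(backup_gb, s3_days, total_days, s3_price, glacier_price):
    """Approximate monthly storage cost with one backup per day.

    backup_gb     -- size of a single backup, in GB
    s3_days       -- days a backup stays in S3 before moving to Glacier
    total_days    -- days until a backup is deleted
    s3_price      -- price per GB-month in S3 (look up your region)
    glacier_price -- price per GB-month in Glacier (look up your region)

    Returns (s3_cost, glacier_cost, total_cost).
    """
    if not 0 <= s3_days <= total_days:
        raise ValueError("s3_days must be between 0 and total_days")
    s3_gb = backup_gb * s3_days
    glacier_gb = backup_gb * (total_days - s3_days)
    s3_cost = s3_gb * s3_price
    glacier_cost = glacier_gb * glacier_price
    return s3_cost, glacier_cost, s3_cost + glacier_cost
```

For this setup you would call it with `backup_gb=0.18`, `s3_days=30`, and `total_days=365`, plus whatever the two prices are today. Lowering `s3_days` shifts storage toward the cheaper tier, which is why moving to Glacier sooner brings the total down.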

What else?

An automated system is great, but you have to test it. For this system, pull a copy out of Glacier, and try to restore it. Know how to do it, and how long it takes. Have a plan. But for getting the backups, this setup is quick and easy. Also, consider storing a copy somewhere else: locally, or on another cloud service. I have thoughts on automating this too[3], but don’t have anything set up quite yet.
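For the restore test, an object that lifecycle rules have moved to Glacier cannot be downloaded directly. You first ask S3 to make a temporary copy available, then wait until that request finishes. Here is a minimal sketch of those two steps, assuming boto3 (a third-party library I have not described elsewhere in this post) and working AWS credentials. The helper names and the 7-day default are choices made for the example.

```python
import re


def restore_ready(restore_header):
    """Interpret the `Restore` value that S3 returns from HeadObject.

    While a restore is running it contains ongoing-request="true";
    once the temporary copy is available it contains ongoing-request="false".
    A missing value means no restore has been requested.
    """
    if not restore_header:
        return False
    match = re.search(r'ongoing-request="(true|false)"', restore_header)
    return bool(match) and match.group(1) == "false"


def request_restore(bucket, key, days=7):
    """Ask S3 for a temporary copy of an archived object, kept for `days`."""
    import boto3  # imported lazily; needs AWS credentials

    boto3.client("s3").restore_object(
        Bucket=bucket,
        Key=key,
        RestoreRequest={
            "Days": days,
            "GlacierJobParameters": {"Tier": "Standard"},
        },
    )


def is_restored(bucket, key):
    """Check whether the temporary copy is ready to download."""
    import boto3

    head = boto3.client("s3").head_object(Bucket=bucket, Key=key)
    return restore_ready(head.get("Restore"))
```

Once `is_restored` returns true, download the object as usual and load it into a scratch database with the PostgreSQL tools. Time the whole run, since the wait for Glacier is the part you most need to know about before an emergency.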

[1] For extra security, I used Jason’s post to make an Amazon IAM user that only has access to my backup bucket, and therefore can’t do anything bad, like delete the backups.

[2] Backups made with pg_dump (as Heroku’s are) are routinely 1/10th the size of your database. That fits here: the data in Postgres is ~2 GB (and a MySQL dump was 1 GB).

[3] Could we use the Dropbox API to upload files which then get synced to people’s local computers?

Comments

I save backups of my WordPress sites to the host’s web server and offsite to a cloud storage service. I’ve considered using Amazon S3 or Glacier, so thanks for sharing numbers.

I didn’t know about the dead man’s snitch, that’s awesome, thanks.

Topher: After setting up my first Snitch, I fell in love with the app. So simple, but solves a huge problem.

Of course, S3 prices just dropped today by 25%, so your backups just got cheaper! http://techcrunch.com/2012/11/28/amazon-web-services-drops-storage-pricing-about-25/

Could you eventually detail the restore from glacier operation?

Cheers